I see many developers designing their queues in a very inefficient way. What do I mean? Most developers use one queue and several workers to process everything. That looks simple, but it’s actually a bad design.

Why?

Because different jobs have different processing times.

- Some jobs are fast (200ms)

- Some are slow (10 seconds+)

Putting these in the same queue causes performance issues. Now imagine the fast job is important, while the slow job is not important—if the slow job enters the queue first, the fast and important job must wait behind it.

This is how real-time systems accidentally break their SLA.

The Problem in Code (How Most Queues Are Built)

type Job struct {

Type string

Payload string

}

var queue = make(chan Job, 10000)

func worker() {

for job := range queue {

switch job.Type {

case "send_email":

time.Sleep(3 * time.Second) // slow

case "real_time_event":

time.Sleep(10 * time.Millisecond) // fast

case "batch_report":

time.Sleep(20 * time.Second) // very slow

}

}

}

func main() {

go worker()

// Everything is dumped into one queue

queue <- Job{Type: "real_time_event"}

queue <- Job{Type: "send_email"}

queue <- Job{Type: "batch_report"}

}In this design, all jobs wait behind each other, regardless of importance or urgency. A 20-second report may block a real-time event that should have finished in milliseconds.

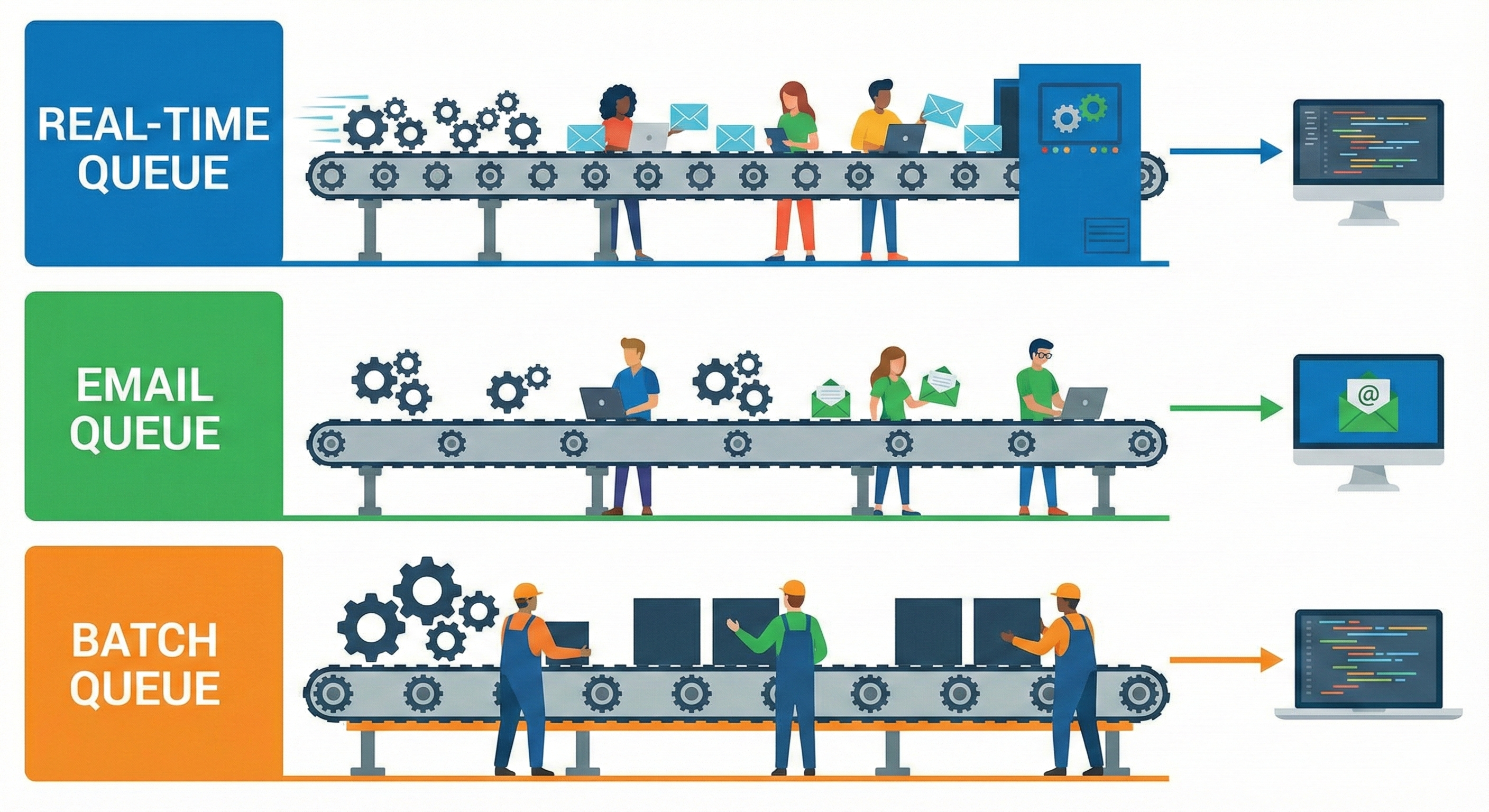

The Fix: Categorize Queues by SLA

We solve this problem by creating separate queues with separate workers based on how fast each task needs to be processed.

type Job struct {

Payload string

}

// Categorized queues

var (

notificationQueue = make(chan Job, 100) // very fast (real-time)

emailQueue = make(chan Job, 500) // medium (emails)

reportQueue = make(chan Job, 5000) // slow / batch jobs

)

// REAL-TIME WORKER (notifications)

func notificationWorker() {

for job := range notificationQueue {

sendPushNotification(job)

}

}

// MEDIUM WORKER (emails)

func emailWorker() {

for job := range emailQueue {

sendEmail(job)

}

}

// BATCH WORKER (monthly reports)

func reportWorker() {

for job := range reportQueue {

generateReport(job)

}

}

func main() {

// Start workers according to urgency

for i := 0; i < 10; i++ { go notificationWorker() }

for i := 0; i < 5; i++ { go emailWorker() }

for i := 0; i < 2; i++ { go reportWorker() }

// Routing jobs by SLA

pushNotification(Job{Payload: "User logged in"})

pushEmail(Job{Payload: "Welcome email"})

pushReport(Job{Payload: "Monthly financial report"})

}

func pushNotification(j Job) { notificationQueue <- j }

func pushEmail(j Job) { emailQueue <- j }

func pushReport(j Job) { reportQueue <- j }Why this works

- Real-time events have more workers because they must be processed immediately.

- Emails have moderate workers because timing is flexible.

- Batch reports have fewer workers because they are slow, resource-heavy, and not urgent.

This structure prevents slow jobs from blocking critical jobs.

Conclusion

Most queueing problems in backend systems are not caused by code bugs—they’re caused by poor queue design. When you mix fast, slow, important, and unimportant jobs in a single queue, you create delays, timeouts, and SLA violations.

The solution is simple:

- Separate jobs by speed and importance.

- Give each queue its own concurrency.

- Avoid letting slow tasks block real-time tasks.

This small architectural change dramatically increases system reliability and performance. Mastering this pattern is essential for building scalable, production-ready backend systems.